I assert here that poor quality has far more serious effects on the lives of these populations. I assert that no, we are not equal in the face of poor quality, and that yes, if we truly care about these people, it is our duty to offer content and services that are accessible, safe, easy to use, simple, reliable, lean, and performant.

In this post, I want to look at ways to help mitigate and work around [the fact that site-speed is nondeterministic & most metrics are not atomic]. We’ll be looking mostly at the latter scenario, but the same principles will help us with the former. However, in a sentence:

🧞 Phenomenal Cosmic Powers, Itty Bitty Living Space

With both solutions measuring user experience metrics, it is natural to assume that they should be equivalent. It can be confusing when we see differences. This guide explains why that can happen and offers suggestions for what to do when the numbers do not align.

Priority Hints are a newly released browser feature, currently available in Chrome and Edge, that gives web developers the option of signaling the relative load-time priorities of significant page resources. These hints are declared by way of a new "fetchpriority" attribute in the page's HTML markup and are relatively easy to apply.
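As a rough illustration of the "fetchpriority" attribute mentioned above, here is a minimal sketch that renders `<img>` markup with an optional hint. The helper names (`renderImage`, `escapeAttr`) and the file paths are hypothetical and only serve the example; the attribute values `high`, `low`, and `auto` are the ones defined for `fetchpriority`.

```typescript
// Hypothetical helper, not part of any library: renders an <img> tag
// with an optional fetchpriority hint.
type FetchPriority = "high" | "low" | "auto";

interface ImageOptions {
  src: string;
  alt: string;
  fetchPriority?: FetchPriority;
}

// Minimal attribute escaping so the generated markup stays well-formed.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;");
}

function renderImage({ src, alt, fetchPriority = "auto" }: ImageOptions): string {
  const attrs = [`src="${escapeAttr(src)}"`, `alt="${escapeAttr(alt)}"`];
  // "auto" is the default behaviour, so the attribute is omitted in that case.
  if (fetchPriority !== "auto") {
    attrs.push(`fetchpriority="${fetchPriority}"`);
  }
  return `<img ${attrs.join(" ")}>`;
}

// Example paths are made up: raise the hero image, lower a footer logo.
const hero = renderImage({ src: "/hero.jpg", alt: "Hero", fetchPriority: "high" });
const footerLogo = renderImage({ src: "/logo.png", alt: "Logo", fetchPriority: "low" });
```

The hint is relative: it nudges the browser's own prioritisation rather than replacing it, so it is probably best reserved for the few resources that matter most on a page.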

Early Hints is a recent addition to the HTTP Informational response (1xx) status codes. Informational response codes are temporary status codes used to inform the client about the status of the request while the server is processing the request to send the final response code (2xx-5xx).

Early Hints is specifically used to pass information on the resources that may be preloaded by the client. The client will eventually need these resources when it renders the final response from the server.
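To make the shape of such a response concrete, here is a minimal sketch that formats a `103 Early Hints` message carrying `Link` preload headers. The function and type names are hypothetical, and the example writes the message as raw HTTP/1.1 text purely for illustration; a real server would send it through its own HTTP API.

```typescript
// Hypothetical types and helpers for illustration only.
interface PreloadHint {
  href: string;
  as: "style" | "script" | "font" | "image";
  crossorigin?: boolean;
}

// Formats one Link header value, e.g. "</style.css>; rel=preload; as=style".
function formatLinkValue(hint: PreloadHint): string {
  const parts = [`<${hint.href}>`, "rel=preload", `as=${hint.as}`];
  if (hint.crossorigin) parts.push("crossorigin");
  return parts.join("; ");
}

// Builds the interim 103 response that precedes the final 2xx-5xx response.
function buildEarlyHints(hints: PreloadHint[]): string {
  const lines = ["HTTP/1.1 103 Early Hints"];
  for (const hint of hints) {
    lines.push(`Link: ${formatLinkValue(hint)}`);
  }
  // A blank line terminates the header section of the interim response.
  return lines.join("\r\n") + "\r\n\r\n";
}

// Example resource paths are assumptions.
const earlyHints = buildEarlyHints([
  { href: "/style.css", as: "style" },
  { href: "/fonts/body.woff2", as: "font", crossorigin: true },
]);
```

The server would send this interim message first, then the final response once it has finished processing the request.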

JavaScript, even if cached, has a significant cost on an end user's device; we'll discuss disk, parse, compilation, IPC, and bytecode loading.

CORS (Cross-Origin Resource Sharing) enables web apps to communicate securely across origins. But it comes with a performance penalty. In this tip, we'll discuss techniques for minimizing this penalty!
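The excerpt does not say which techniques it covers. One widely used option, shown here as an assumption rather than as the source's recommendation, is to let the browser cache preflight (`OPTIONS`) responses with `Access-Control-Max-Age`, so that repeated cross-origin requests skip the extra round trip. The `CorsPolicy` type, the `preflightHeaders` function, and the origin below are hypothetical.

```typescript
// Hypothetical policy shape for illustration.
interface CorsPolicy {
  allowedOrigin: string;
  allowedMethods: string[];
  allowedHeaders: string[];
  maxAgeSeconds: number; // how long the browser may cache the preflight result
}

// Builds the headers for a preflight (OPTIONS) response.
function preflightHeaders(policy: CorsPolicy): Record<string, string> {
  return {
    "Access-Control-Allow-Origin": policy.allowedOrigin,
    "Access-Control-Allow-Methods": policy.allowedMethods.join(", "),
    "Access-Control-Allow-Headers": policy.allowedHeaders.join(", "),
    // Browsers apply their own upper limit to this value.
    "Access-Control-Max-Age": String(policy.maxAgeSeconds),
    // The response depends on the requesting origin, so caches must key on it.
    "Vary": "Origin",
  };
}

// Example origin and values are assumptions.
const headers = preflightHeaders({
  allowedOrigin: "https://app.example.com",
  allowedMethods: ["GET", "POST"],
  allowedHeaders: ["Content-Type"],
  maxAgeSeconds: 7200,
});
```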

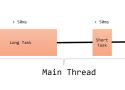

Since there is only one Main Thread responsible for all these Tasks, any Task that takes a particularly long time to execute will clog up the thread and degrade user experience.
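One common way to keep a single task from monopolising the thread is to split the work into batches and hand control back between them. The sketch below assumes `setTimeout` as the yielding mechanism and uses hypothetical helper names; the batch size is something to tune for the workload.

```typescript
// Splits an array into batches of at most `size` items.
function chunk<T>(items: T[], size: number): T[][] {
  if (size < 1) throw new RangeError("size must be at least 1");
  const out: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    out.push(items.slice(i, i + size));
  }
  return out;
}

// Resolves in a later task, giving pending input and rendering work a chance to run.
function yieldToEventLoop(): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(resolve, 0));
}

// Processes items batch by batch instead of in one long task.
async function processInChunks<T>(
  items: T[],
  size: number,
  handle: (item: T) => void,
): Promise<void> {
  for (const batch of chunk(items, size)) {
    for (const item of batch) handle(item);
    await yieldToEventLoop();
  }
}
```

Each batch still blocks the thread while it runs, so the batch size sets the longest stretch during which the page cannot respond.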

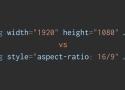

By default, an <img> takes up zero space until the browser loads enough of the image to know its dimensions.

To work around this, we can use the width and height attributes, or the aspect-ratio CSS property. Which is best? It depends on whether the image is content or design!
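Both options can be sketched as small markup generators. The mapping used here (attributes for content images, CSS for decorative ones) is an assumption about what the excerpt means, and the function names, selector, and file paths are hypothetical.

```typescript
// Greatest common divisor, used to reduce the ratio (1600x900 becomes 16 / 9).
function gcd(a: number, b: number): number {
  return b === 0 ? a : gcd(b, a % b);
}

// Content image: intrinsic dimensions live in the markup, next to the image itself.
function contentImage(src: string, alt: string, width: number, height: number): string {
  return `<img src="${src}" alt="${alt}" width="${width}" height="${height}">`;
}

// Design image: the ratio lives in the stylesheet, next to the rest of the layout rules.
function aspectRatioRule(selector: string, width: number, height: number): string {
  const d = gcd(width, height);
  return `${selector} { aspect-ratio: ${width / d} / ${height / d}; }`;
}

// Example values are assumptions.
const photo = contentImage("/cat.jpg", "A cat", 800, 600);
const bannerRule = aspectRatioRule(".banner", 1600, 900);
```

Either way, the browser can reserve the right amount of space before the image data arrives.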

To format their posts on social networks, many people use generators for fake bold text, fake italics, and fancy characters, and overuse emojis. Most of the time, this is a misuse of Unicode characters. It causes accessibility problems for people with disabilities and, in particular, for blind people who use a screen reader. The text will not be read correctly and will be completely incomprehensible. Demonstrations and explanations.

Instead, hostile political discussions are the result of status-driven individuals who are drawn to politics and are equally hostile both online and offline. Finally, we offer initial evidence that online discussions feel more hostile, in part, because the behavior of such individuals is more visible online than offline.

Using a standard HTML link will always be the best solution: the most accessible, the most usable, the most reliable, the most robust, the most maintainable.

List of tools for subtitling your videos and podcasts

When lazy loading components, slower connections can cause CLS that you wouldn’t see on wifi connections.

Either don’t lazy load the component at all, or wait for the JS file to be loaded and mounted.

I want a feature called megablock that blocks a bad tweet, the bad tweet’s author, and every single person who liked the bad tweet, then sounds a brief alarm and makes your phone vibrate

Priority Hints fill the gap of prioritising resources that the browser already knows about against each other.

We are happy to announce that we are open sourcing our first performance quality model, trained on millions of RUM data samples from around the world and free to use for your own website performance optimizations!

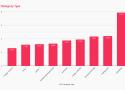

[…] the takeaway is pretty simple: text-based LCPs are the fastest, but unlikely to be possible for most. Of the image-based LCP types, <img /> and poster are the fastest.

The <div> is the most versatile and used element in HTML. It represents nothing, while allowing developers to manipulate it into almost anything by use...