With both solutions measuring user experience metrics, it is natural to assume that they should be equivalent, so it can be confusing when we see differences. This guide explains why that can happen and offers suggestions for what to do when the numbers do not align.

Priority Hints are a newly released browser feature, currently available in Chrome and Edge, that gives web developers a way to signal the relative load-time priorities of significant page resources. These hints are declared via a new `fetchpriority` attribute in the page's HTML markup and are relatively easy to apply.
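The following sketch makes the markup concrete by rendering an image tag that carries a `fetchpriority` hint. The `renderImg` and `escapeAttr` helpers and the file names are hypothetical. In hand-written HTML you would simply add the attribute yourself. The accepted values are `high`, `low`, and `auto` (the default).

```typescript
// Hypothetical helper: renders an <img> tag with an optional Priority Hint.
type ImgFetchPriority = "high" | "low" | "auto";

// Minimal attribute escaping so arbitrary src/alt values stay well-formed.
function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

function renderImg(src: string, alt: string, priority: ImgFetchPriority): string {
  // "auto" is the browser default, so the attribute is omitted in that case.
  const hint = priority === "auto" ? "" : ` fetchpriority="${priority}"`;
  return `<img src="${escapeAttr(src)}" alt="${escapeAttr(alt)}"${hint}>`;
}

// A likely LCP hero image is boosted; a below-the-fold logo is demoted.
console.log(renderImg("/hero.jpg", "Product hero", "high"));
// <img src="/hero.jpg" alt="Product hero" fetchpriority="high">
console.log(renderImg("/footer-logo.png", "Logo", "low"));
```

The attribute is only a hint. The browser still makes the final scheduling decision.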

Early Hints (status code 103) is a recent addition to the HTTP informational (1xx) response status codes. Informational responses are interim responses that tell the client about the status of the request while the server is still preparing the final response (2xx-5xx).

Early Hints is specifically used to pass information on the resources that may be preloaded by the client. The client will eventually need these resources when it renders the final response from the server.
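In practice, the preloadable resources are listed in `Link` headers on the 103 response. The helper below is a minimal sketch that builds such a header value. The `earlyHintsLinkHeader` function, the `PreloadHint` shape, and the file paths are hypothetical, and the 103 response itself would be sent by your server or CDN.

```typescript
// Hypothetical description of one resource the client should preload.
interface PreloadHint {
  href: string;
  as: "style" | "script" | "font" | "image";
  crossorigin?: boolean; // fonts are fetched in CORS mode, so they need this
}

// Builds the Link header value to send in a "103 Early Hints" interim response.
function earlyHintsLinkHeader(hints: PreloadHint[]): string {
  return hints
    .map((h) => {
      let value = `<${h.href}>; rel=preload; as=${h.as}`;
      if (h.crossorigin) value += "; crossorigin";
      return value;
    })
    .join(", ");
}

const header = earlyHintsLinkHeader([
  { href: "/styles/main.css", as: "style" },
  { href: "/fonts/inter.woff2", as: "font", crossorigin: true },
]);
console.log(`Link: ${header}`);
```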

JavaScript, even when cached, has a significant cost on the end user's device; we'll discuss disk, parse, compilation, IPC, and bytecode loading costs.

CORS (Cross-Origin Resource Sharing) enables web apps to communicate securely across origins, but it comes with a performance penalty. In this tip, we'll discuss techniques for minimizing this penalty!
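The tip's specific techniques are not reproduced here. One well-known source of CORS overhead is the preflight `OPTIONS` round trip that the browser makes before certain cross-origin requests. As an illustration only, the sketch below checks whether a request would need a preflight. It is simplified: it ignores the Fetch spec's limits on header values and other edge cases. The `needsPreflight` helper is hypothetical.

```typescript
// Simplified rules (assumption: header value limits and other edge cases
// from the Fetch spec are ignored) for when a cross-origin request is
// "simple" and can skip the preflight OPTIONS round trip.
const SIMPLE_METHODS = ["GET", "HEAD", "POST"];
const SAFELISTED_HEADERS = ["accept", "accept-language", "content-language", "content-type"];
const SIMPLE_CONTENT_TYPES = ["application/x-www-form-urlencoded", "multipart/form-data", "text/plain"];

function needsPreflight(method: string, headers: Record<string, string>): boolean {
  if (SIMPLE_METHODS.indexOf(method.toUpperCase()) < 0) return true;
  for (const key of Object.keys(headers)) {
    const lower = key.toLowerCase();
    if (SAFELISTED_HEADERS.indexOf(lower) < 0) return true; // e.g. Authorization
    if (lower === "content-type") {
      // Only the MIME type matters; parameters such as charset are ignored.
      const essence = headers[key].split(";")[0].trim().toLowerCase();
      if (SIMPLE_CONTENT_TYPES.indexOf(essence) < 0) return true;
    }
  }
  return false;
}

console.log(needsPreflight("POST", { "Content-Type": "application/json" })); // true
console.log(needsPreflight("GET", { Accept: "application/json" })); // false
```

When a preflight cannot be avoided, the server's `Access-Control-Max-Age` response header lets the browser cache the preflight result instead of repeating it.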

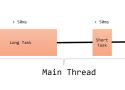

Since there is only one Main Thread responsible for all these Tasks, any Task that takes a particularly long time to execute will clog up the thread and degrade user experience.
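How much a long Task clogs the thread can be quantified. The sketch below assumes you already have a list of task durations. In the browser these would come from the Long Tasks API through a `PerformanceObserver`; here they are made-up numbers. Tasks longer than 50 ms count as long. Total Blocking Time (TBT) adds up the part of each long task that exceeds 50 ms. TBT is defined over the window between First Contentful Paint and Time to Interactive, and the sample is assumed to fall inside it.

```typescript
// Tasks longer than 50 ms are "long tasks" (the Long Tasks API threshold).
const LONG_TASK_MS = 50;

function longTasks(durationsMs: number[]): number[] {
  return durationsMs.filter((d) => d > LONG_TASK_MS);
}

// Total Blocking Time: the sum of each long task's time beyond 50 ms,
// i.e. how long the main thread could not respond to input promptly.
function totalBlockingTime(durationsMs: number[]): number {
  return longTasks(durationsMs).reduce((sum, d) => sum + (d - LONG_TASK_MS), 0);
}

const sampleTasks = [12, 250, 48, 90, 51]; // hypothetical durations in ms
console.log(longTasks(sampleTasks).length); // 3 long tasks
console.log(totalBlockingTime(sampleTasks)); // 241 (200 + 40 + 1)
```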

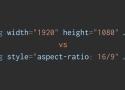

By default, an `<img>` takes up zero space until the browser loads enough of the image to know its dimensions.

To work around this, we can use the `width` and `height` attributes, or the `aspect-ratio` CSS property. Which is best? It depends on whether the image is content or design!
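Both approaches work because they give the browser an aspect ratio before any image bytes arrive. Modern browsers map the `width` and `height` attributes to a default aspect ratio. As a result, an image styled with `width: 100%; height: auto` gets a correctly sized box up front. The hypothetical `reservedHeight` helper below shows the arithmetic involved.

```typescript
// Height the browser can reserve before the image loads, when CSS scales the
// image to the container width and a ratio is known from the width/height
// attributes (or from the CSS aspect-ratio property).
function reservedHeight(renderedWidth: number, attrWidth: number, attrHeight: number): number {
  if (attrWidth <= 0 || attrHeight <= 0) return 0; // no ratio known: zero space
  return renderedWidth * (attrHeight / attrWidth);
}

// <img width="1600" height="900"> displayed in a 400px-wide column
console.log(reservedHeight(400, 1600, 900)); // 225
```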

On slower connections, lazy-loading components can cause CLS that you wouldn’t see on Wi-Fi.

Either don’t lazy-load the component at all, or wait for its JS file to be loaded and the component to be mounted.
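A late-mounting component causes CLS because each unexpected shift is scored as *impact fraction × distance fraction*. The sketch below computes that score for a single full-width element that moves only vertically. This is a simplifying assumption: real CLS also handles horizontal movement and several elements per frame, and it groups shifts into session windows. The `layoutShiftScore` helper and the pixel values are hypothetical.

```typescript
// Simplified model (assumption): one full-width element moving vertically.
interface ViewportSize {
  width: number;
  height: number;
}

function layoutShiftScore(vp: ViewportSize, top: number, height: number, shiftPx: number): number {
  // Impact fraction: union of the element's old and new visible areas, as a
  // fraction of the viewport area (full width, so comparing heights suffices).
  const unionTop = Math.max(0, Math.min(top, top + shiftPx));
  const unionBottom = Math.min(vp.height, Math.max(top + height, top + height + shiftPx));
  const impactFraction = Math.max(0, unionBottom - unionTop) / vp.height;
  // Distance fraction: distance moved over the viewport's largest dimension.
  const distanceFraction = Math.abs(shiftPx) / Math.max(vp.width, vp.height);
  return impactFraction * distanceFraction;
}

// A 400px-tall block at y=200 is pushed down 200px when a lazy-loaded
// component finally mounts above it on a slow connection.
const score = layoutShiftScore({ width: 400, height: 800 }, 200, 400, 200);
console.log(score); // 0.1875, above the 0.1 "good" CLS threshold
```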

Priority Hints fill the gap of prioritising resources that the browser already knows about against each other.

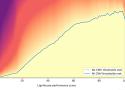

We are happy to announce that we are open-sourcing our first performance quality model, trained on millions of RUM data samples from around the world and free to use for your own website performance optimizations!

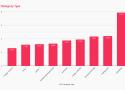

[…] the takeaway is pretty simple: text-based LCPs are the fastest, but unlikely to be possible for most. Of the image-based LCP types, `<img />` and `poster` are the fastest.

By using techniques that assess the performance impact of a build in relation to the performance characteristics (magnitude, variance, trend) of adjacent builds, we can more confidently distinguish genuine regressions from metrics that are elevated for other reasons (e.g. inherited code, regressions in previous builds or one-off data spikes due to test irregularities). We also spend less time chasing false negatives and no longer need to manually assign a threshold to each result — the data itself now sets the thresholds dynamically.
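To illustrate the idea of letting the data set the threshold, the sketch below uses a simple z-score rule as a stand-in, not the exact technique quoted above. A build is flagged when its metric exceeds the mean of the preceding builds by more than *k* standard deviations. The rule covers magnitude and variance only, and trend is not modeled. The function names, window size, *k*, and sample values are all hypothetical.

```typescript
// Assumption: a z-score rule over recent builds, not the authors' method.
function mean(xs: number[]): number {
  return xs.reduce((sum, x) => sum + x, 0) / xs.length;
}

function stdDev(xs: number[]): number {
  const m = mean(xs);
  return Math.sqrt(mean(xs.map((x) => (x - m) * (x - m))));
}

function isRegression(previous: number[], current: number, windowSize = 10, k = 3): boolean {
  const recent = previous.slice(-windowSize);
  if (recent.length < 2) return false; // too little data to derive a threshold
  // The threshold comes from the data itself: mean plus k standard deviations.
  const threshold = mean(recent) + k * stdDev(recent);
  return current > threshold;
}

// Hypothetical metric values (e.g. ms) for the five most recent builds.
const pastBuilds = [100, 102, 98, 101, 99];
console.log(isRegression(pastBuilds, 103)); // false: within normal variance
console.log(isRegression(pastBuilds, 110)); // true: well above the threshold
```

A noisy metric gets a wider band automatically, which is what removes the need to hand-tune a threshold per result.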

We see in the data that the presence of certain errors leads to user actions that signal frustration, with bottom-line implications for the business serving the site. The two most prominent cases of this are reloads and abandonments (page exits).

Why? Because it is pretty hard to “understand” the page exit or the reload in the session replay (SR).

Indeed, we just see the error at the very last second and then, boom, the replay ends (or the next replay starts, in the case of a reload).

Today, the We Love Speed conference took place in Lyon, France. It focuses on everything related to web performance. Even though the talks were only in French, I'll write this recap in English so that more people can learn from it.

In the new responsiveness metrics, we measure the latency of user interactions (how your customers navigate and act on your website) rather than of individual events. A user interaction, such as a tap (click), drag, or keyboard interaction, usually triggers multiple events.
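In the Event Timing API, events that belong to the same interaction share an `interactionId`, and an interaction's latency is taken as the longest duration among its events. The sketch below groups event records that way. The `EventTimingRecord` shape copies only the fields it needs from `PerformanceEventTiming`, and the sample values are made up.

```typescript
// Minimal event-timing record; fields mirror PerformanceEventTiming's
// name, duration, and interactionId.
interface EventTimingRecord {
  name: string;
  duration: number;
  interactionId: number;
}

// Groups events by interaction; each interaction's latency is the longest
// duration among the events it triggered.
function interactionLatencies(entries: EventTimingRecord[]): Record<number, number> {
  const latencies: Record<number, number> = {};
  entries.forEach((e) => {
    if (e.interactionId === 0) return; // 0 means "not part of an interaction"
    latencies[e.interactionId] = Math.max(latencies[e.interactionId] || 0, e.duration);
  });
  return latencies;
}

// One tap fires several events that share interactionId 7 (hypothetical data).
const tapEvents: EventTimingRecord[] = [
  { name: "pointerdown", duration: 24, interactionId: 7 },
  { name: "pointerup", duration: 48, interactionId: 7 },
  { name: "click", duration: 48, interactionId: 7 },
  { name: "mousemove", duration: 16, interactionId: 0 },
];
console.log(interactionLatencies(tapEvents)[7]); // 48
```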

"AMP Has Irreparably Damaged Publishers’ Trust in Google-led Initiatives", Sarah Gooding (@wptavern)

In summary, it claims that Google falsely told publishers that adopting AMP would enhance load times, even though the company’s employees knew that it only improved the “median of performance” and actually loaded slower than some speed optimization techniques publishers had been using.

It’s easy to get excited about new techniques for measuring and improving site speed but this focus on the technical side of performance can lead us to think of speed as a technical issue, rather than a business issue with technical roots.

Almost half of all pages that scored 100 on Lighthouse didn’t meet the recommended Core Web Vitals thresholds.

[…]

- If you're going to talk about the performance of a production site, use real-user data.

- If you're going to use a single number to cite a performance result, specify where that number falls in the distribution.

- When talking about real-user performance, be specific about the time period.

- If you do want to brag about your Lighthouse score or other lab results, do so in the context of the larger performance story.
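A cited number should say where it falls in the distribution. For example, Core Web Vitals assessments use the 75th percentile. The sketch below uses the nearest-rank percentile, which is one of several common definitions, and some tools interpolate instead. The LCP samples are made up.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of
// all samples are less than or equal to it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

const lcpMs = [1200, 1800, 2100, 2500, 2600, 3100, 3900, 5200]; // hypothetical
console.log(`LCP p50: ${percentile(lcpMs, 50)} ms, p75: ${percentile(lcpMs, 75)} ms`);
// LCP p50: 2500 ms, p75: 3100 ms
```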

Core Web Vitals is a measurable SEO ranking factor. This data study shows the changes seen during the Page Experience Update in July-Aug 2021.

Render-blocking resources are a common hurdle to rendering your page faster. They affect your Web Vitals, which now affect your SEO. Slow render times also frustrate your users and can cause them to abandon your page.
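As a rough rule of thumb, the usual culprits are classic scripts in the `<head>` without `async` or `defer`, and stylesheets whose media applies to the page. The sketch below encodes that rule. The `HeadResource` shape and `isRenderBlocking` helper are hypothetical. Real browsers evaluate full media queries, whereas this version treats only `print` as non-matching.

```typescript
// Hypothetical, simplified description of a resource in the document <head>.
interface HeadResource {
  tag: "script" | "link";
  rel?: string; // for <link>, e.g. "stylesheet" or "preload"
  media?: string; // for stylesheets
  async?: boolean;
  defer?: boolean;
  type?: string; // for <script>, e.g. "module"
}

function isRenderBlocking(r: HeadResource): boolean {
  if (r.tag === "script") {
    // Classic scripts without async/defer block the parser;
    // module scripts are deferred by default.
    return !r.async && !r.defer && r.type !== "module";
  }
  if (r.rel === "stylesheet") {
    // Assumption: only media="print" is treated as non-matching here.
    return r.media !== "print";
  }
  return false; // e.g. preload hints do not block rendering
}

console.log(isRenderBlocking({ tag: "script" })); // true
console.log(isRenderBlocking({ tag: "script", defer: true })); // false
console.log(isRenderBlocking({ tag: "link", rel: "stylesheet", media: "print" })); // false
```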